In an episode of the TV series The Sopranos, Carmela Soprano discovers that she is unable to retain a competent lawyer for her divorce proceedings, apparently due to her husband Tony having contacted a number of them in advance, ostensibly for advice on unrelated matters. In so doing, Tony deviously “polluted the attorneys” by creating a conflict of interest for them.

It would seem that some law firms may be incorporating this questionable practice into their approach to obtaining subject matter experts and expert witnesses for high-profile litigation. Apparently, the strategy involves interviewing a large number of known experts through a broker, requiring the candidates to agree to non-disclosure during the initial email contacts, and then rejecting them as candidates, often cutting off all further communication.

As a result, the experts are precluded from being retained by the opposing party because of a “restrictive covenant” in the original email communication, even though no confidential information was exchanged and they received no compensation for their acquiescence. Essentially, the expert has provided value to the broker and law firm (exclusivity) in return for nothing.

I can describe my own experience in these matters. I was approached to consult as a subject matter expert for a number of very high-profile cases, and each time, I was directed to agree to a “confidentiality agreement” before I was interviewed for suitability. Objectively, it appeared that I was one of very few people who were an extremely good match to a very specific, and narrow, set of experience requirements. Nonetheless, I was rejected after responding to an email containing a restrictive covenant, with no further communication at all.

Lest the reader conclude that I simply was rejected as non-qualified, I can point to the fact that, in several instances, all communication ceased after I solicited a sample NDA, without having mentioned compensation or any other conditions. It would seem there is something unreasonable going on here.

The solution to expert-pool contamination requires a willingness by all parties to accept responsibility.

- Experts: When considering retention as a subject matter expert, insist on a written NDA, and consider one whose execution requires an initial non-refundable retention payment to you (you can always apply it to the initial consulting time, if retained). Be sure to scrutinize the language of the initial contact emails, and specifically disagree with any language that requires confidentiality without a formal agreement.

- Law firms: First of all, refrain from this behavior, which is at best unethical. Considering the fact that you may be on the losing end of such a situation, it’s in the best interest of the profession as a whole to avoid a “mutually assured destruction” mindset. Look to include, rather than exclude, talent. It’s not too expensive to build a contingent of experts on a retainer, so that you can pick and choose the right one as needed; plus, it’s insurance against illness and other contingencies. Employers customarily reimburse candidates for travel expenses to a job interview, so it should not be considered unusual to compensate an expert at the very least for a telephone (or in-person) interview, especially if it’s in return for exclusivity.

- Brokers: Your biggest asset is your talent base — don’t blow it by agreeing to a shady practice that will eventually hurt you. Don’t forget, you need a good supply as much as you need an ongoing demand.

–agc

Separately, the two companies also announced at the 2019 CES Show that they signed a Memorandum of Understanding (MoU) with

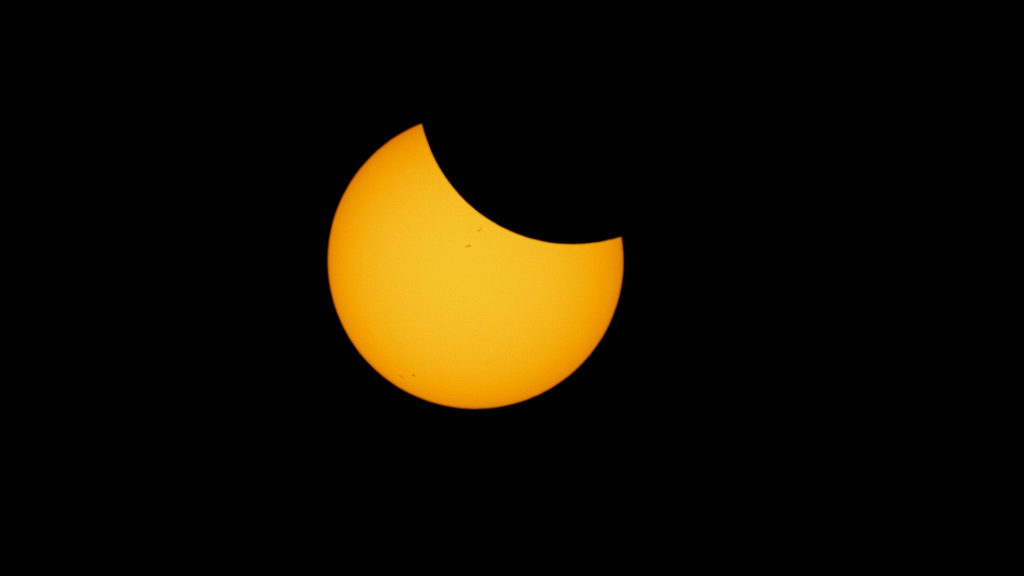

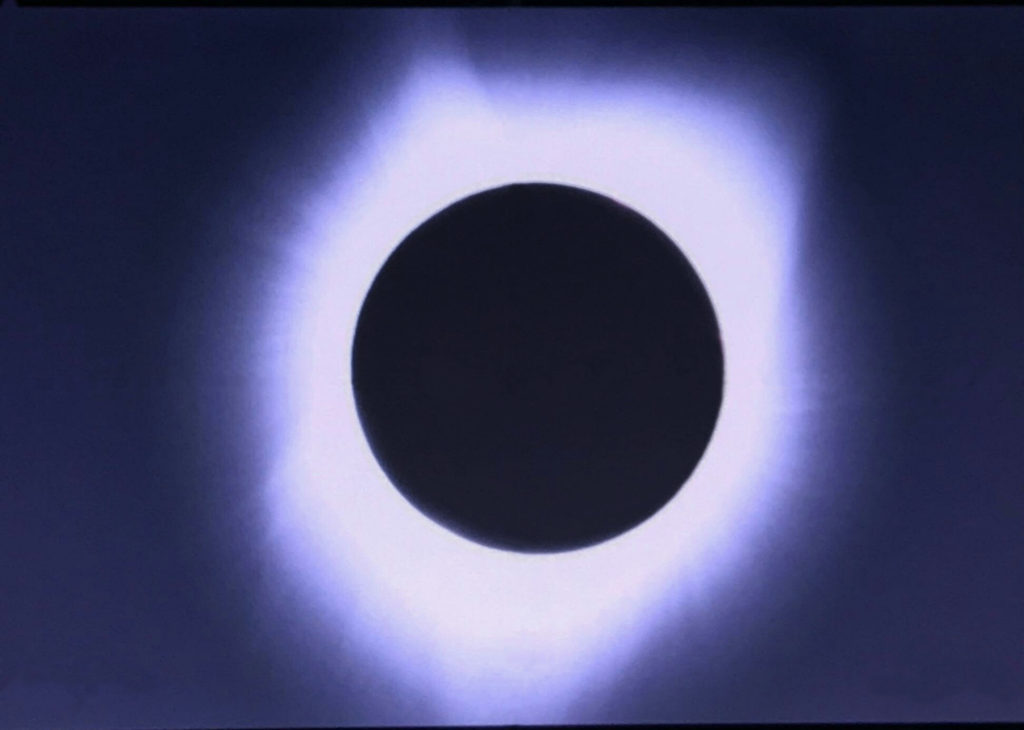

Bowing to the awesome spectacle that is a

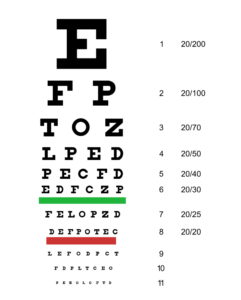

So what is the practical viewing distance for a display? People with “20/20” vision have a visual acuity that can resolve 60 features per degree, or 30 cycles per degree. From this, we can calculate that the “optimum” distance from which to observe a 1080-line display is about 3.2 times the picture height, where the vertical viewing angle is 18 degrees. Farther away than that, a person with 20/20 corrected vision can’t resolve the smallest displayed details; closer than that, and you’ll start to see individual pixels.
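The arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration under the assumptions stated above (one displayed line per resolvable feature, 60 features per degree, a flat screen viewed head-on). The function name `optimum_viewing_distance` is made up for this example.

```python
import math

def optimum_viewing_distance(lines, features_per_degree=60.0):
    """Return (vertical viewing angle in degrees, distance in picture heights).

    Assumes each displayed line corresponds to one resolvable feature,
    so the whole picture height subtends lines / features_per_degree degrees.
    """
    angle_deg = lines / features_per_degree
    # The picture height H spans the full angle, so half of H spans half of it:
    # tan(angle / 2) = (H / 2) / distance  =>  distance / H = 1 / (2 * tan(angle / 2))
    half_angle_rad = math.radians(angle_deg / 2.0)
    distance_in_heights = 1.0 / (2.0 * math.tan(half_angle_rad))
    return angle_deg, distance_in_heights

angle, dist = optimum_viewing_distance(1080)
print(f"{angle:.0f} degrees, {dist:.2f} picture heights")
# prints "18 degrees, 3.16 picture heights"
```

The result of roughly 3.16 picture heights is what the text rounds to "about 3.2". Passing a different line count gives the corresponding distance for other display formats under the same assumptions.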